Recent Link Classification on Temporal Graphs Using Graph Profiler

Cash App customers can interact with each other and with merchants in various ways. They can send payments to one another, gift stocks, purchase and send Bitcoin, or buy products using their Cash App Card. These interactions create a constantly evolving transaction graph.

To ensure Cash App remains safe for our customers, we employ advanced machine learning techniques to anticipate the safety of transactions. Using state-of-the-art machine learning approaches, we do not only adopt the latest innovations, but also contribute to advancing the field. In this post, we will share an overview of one of our latest papers published in the Transactions on Machine Learning Research. An overview of our research contributions are as follows:

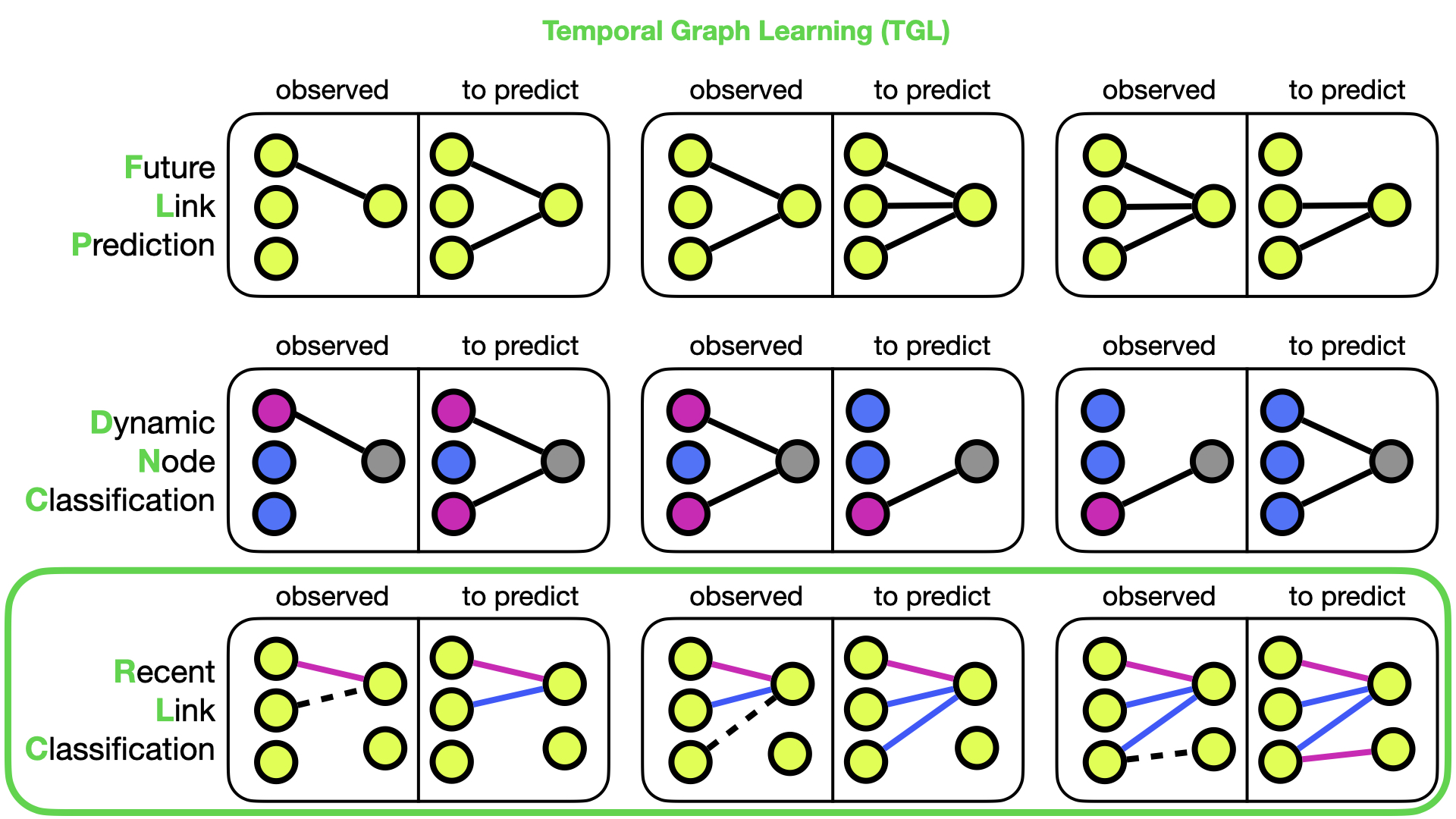

- Formalization of RLC: We establish Recent Link Classification (RLC) as a distinct task in TGL, providing benchmark datasets to facilitate research and development in this area.

- Evaluation Metrics: We emphasize the importance of using the Matthews Correlation Coefficient (MCC) alongside traditional metrics like average precision and area under the curve. MCC is particularly robust for imbalanced datasets, which are common in real-world scenarios.

- Design Principles: Our paper outlines several principles for designing models tailored to RLC tasks, including modifications in message aggregation, readout layers, and time encoding strategies.

- Graph Profiler: We propose, Graph Profiler, which encodes class information from previous events on source and destination nodes, significantly improving performance on benchmark datasets.

What is Recent Link Classification?

Because of the constantly changing transaction graph, Temporal Graph Learning (TGL) is one of our focal research areas. There are two common benchmark downstream tasks in TGL: Future Link Prediction (FLP), which refers to the task of predicting whether two nodes will be connected in the future, and Dynamic Node Classification (DNC), which is about predicting whether the class of nodes will change. Within the scope of this work, we’ve defined a new task called Recent Link Classification (RLC), which focuses on link classification within temporal graphs on the settings that the edge labels are delayed. This task is inspired by detection of unhealthy transactions on financial networks.

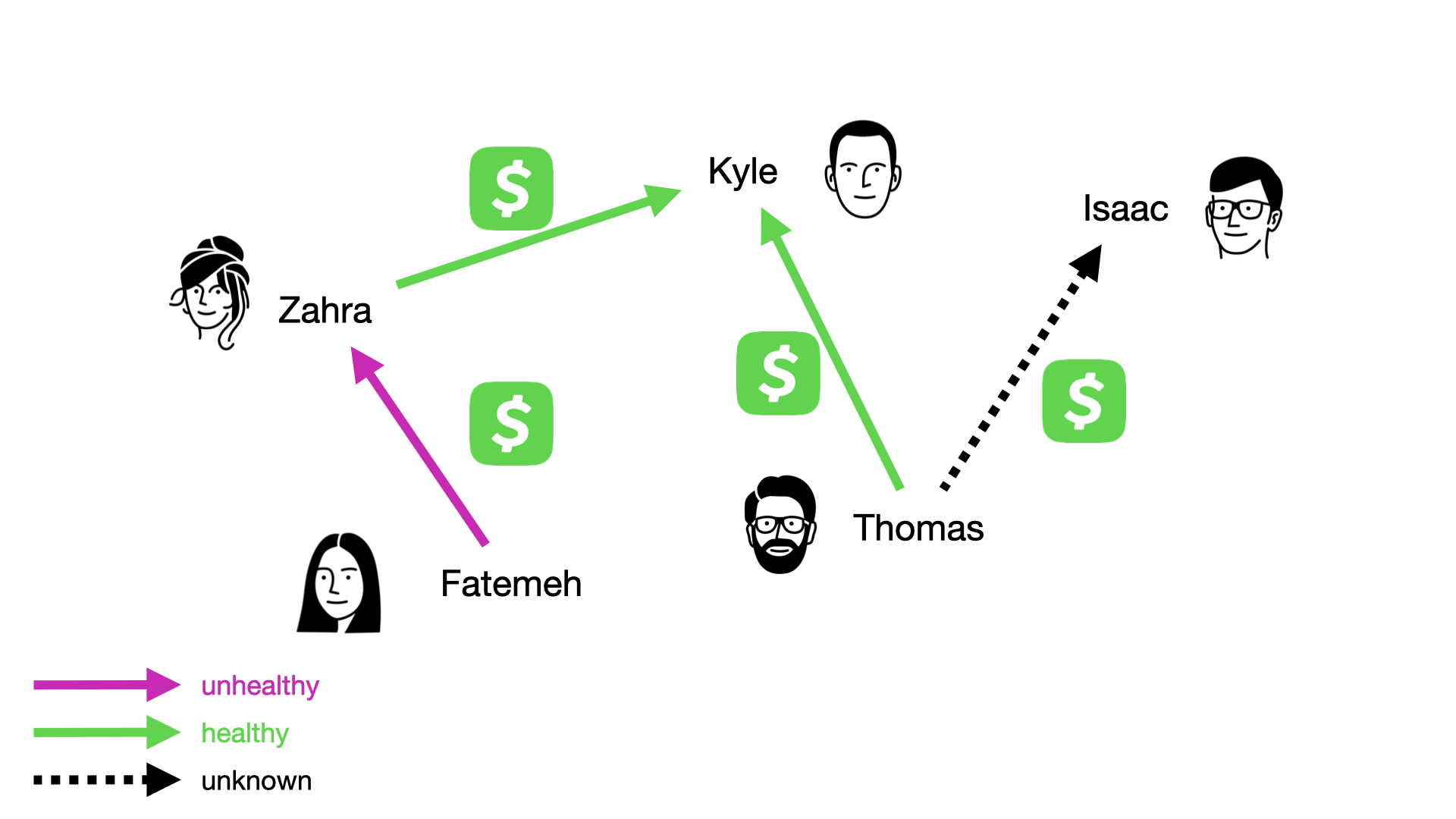

Let’s consider a transaction graph $\mathcal{G} = (\mathcal{V}, \mathcal{E}_{<t})$, where the set of customers is given by;

\[\mathcal{V} = \{\text{Zahra}, \text{Kyle}, \text{Fatemeh}, \text{Thomas}, \text{Isaac} \},\]and the set of transactions until $t$ is by;

\[\mathcal{E}_{<t} = \left\{\begin{matrix} &( &\text{Zahra}, &\text{Kyle}, &\$100, & \text{Jan 1st}, & \text{healthy} &)\\ &( &\text{Fatemeh}, &\text{Zahra}, &\$50, & \text{Jan 1st}, & \text{unhealthy} &)\\ &( &\text{Thomas}, &\text{Kyle}, &\$300, & \text{Jan 1st}, & \text{healthy} &)\\ \end{matrix} \right\},\]such that a transaction (src, dst, msg, t, y) from sender src to receiver dst at time t in the amount of msg is labeled by y.

Let’s assume on Jan 2nd, Thomas sent $100 to Isaac, i.e., src = Thomas, dst = Isaac, msg = $100, t = Jan 2nd. The aim in Recent Link Classification (RLC) is to learn a classifier that can predict whether this new transaction is healthy or unhealthy given $\mathcal{G} = (\mathcal{V}, \mathcal{E}_{<t})$. Now let’s go over an overview of model architecture we propose for this task, Graph Profiler.

Graph Profiler Architecture

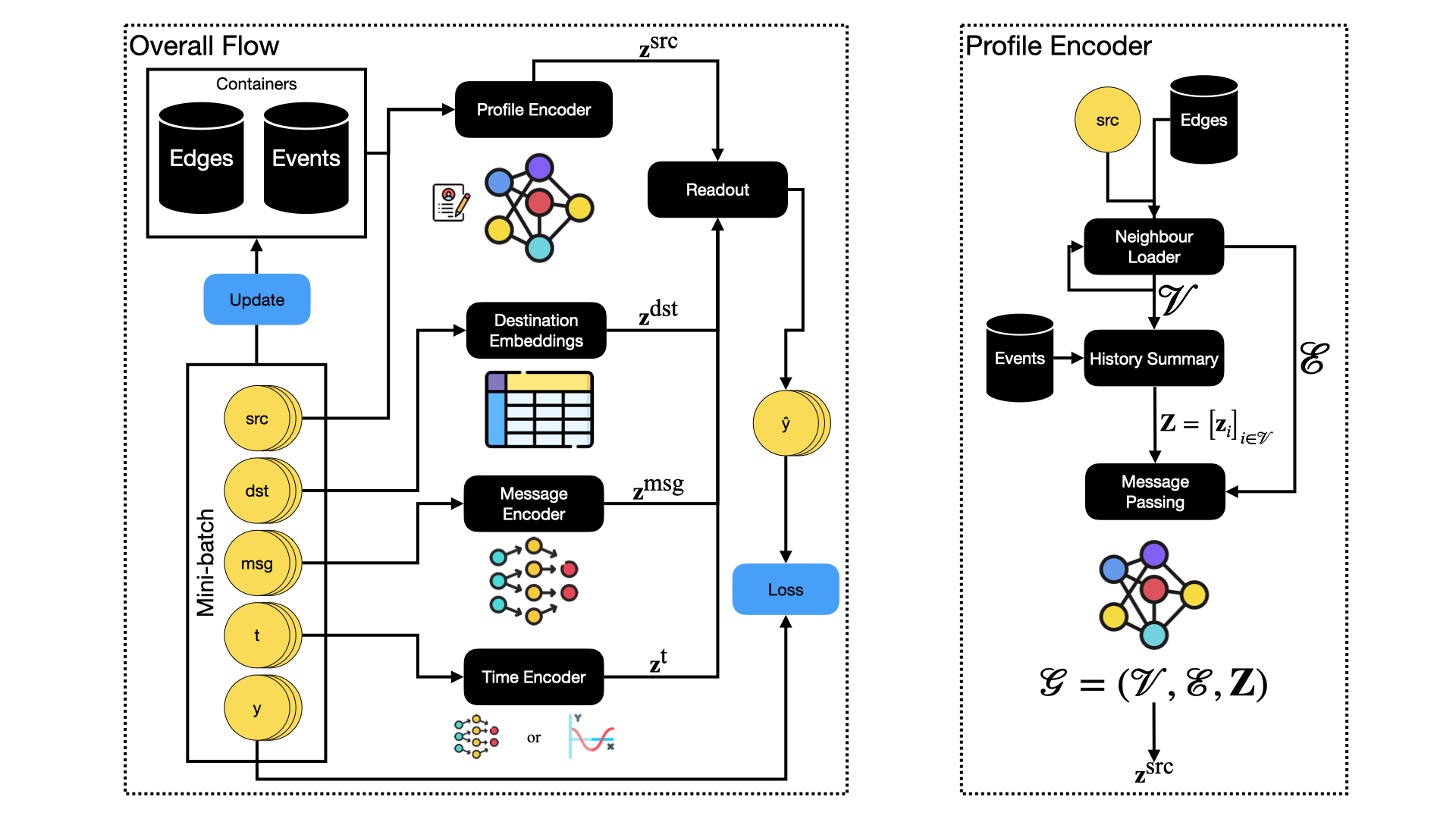

Graph Profiler is designed to maintain dynamic profiles of nodes by incorporating historical interaction data. This approach allows for the creation of time-aggregated representations that capture long-term preferences and behaviors of entities. The architecture includes:

- Profile Encoder: Aggregates messages from past interactions to update node profiles.

- Message Passing: Facilitates communication between nodes to propagate information.

- Time Encoding: Incorporates temporal aspects to ensure the model adapts to evolving graph structures.

- Readout Layer: Generates final predictions based on the aggregated information.

Given a mini-batch of interactions, where each interaction is from a source node src to a destination node dst, observed at time t, associated with features msg and belongs to class y, Graph Profiler follows these steps:

- The profile encoder calculates source node embeddings $\mathbf{z}^{\text{src}}$ by (1) loading the neighborhood of

srcgiven the current set of edges, (2) summarizing the history of all nodes that belong to the neighborhood ofsrcusing past events storage, and (3) updating the node embeddings on the ego graph ofsrcusing message passing. - The identity of

dstis used to retrieve the embedding of the destination node from the table of destination embeddings $\mathbf{z}^{\text{dst}}$. - The message encoder performs a linear transformation on the edge features to obtain $\mathbf{z}^{\text{msg}}$.

- The time encoder projects timestamp

tinto a time embedding $\mathbf{z}^{\text{t}}$. - The readout layer combines source $\mathbf{z}^{\text{src}}$, destination $\mathbf{z}^{\text{dst}}$, message $\mathbf{z}^{\text{msg}}$ and time $\mathbf{z}^{\text{t}}$ embeddings to predict the class of the interaction.

- Afterwards, the containers are updated with the information in the mini-batch.

Next we will list the datasets we use for our experiments of testing various models on this task.

Datasets

We evaluated our methods on four benchmark datasets commonly used in the TGL community: YelpCHI, Wikipedia, Mooc, and Reddit [1, 2]. These datasets, typically used for future link prediction, were adapted for the RLC task. Additionally, we processed two tabular datasets not usually explored in the TGL context: Open Sea and Epic Games [3, 4, 5].

Now we will discover the performance of baselines and proposed method.

Model Comparison Results

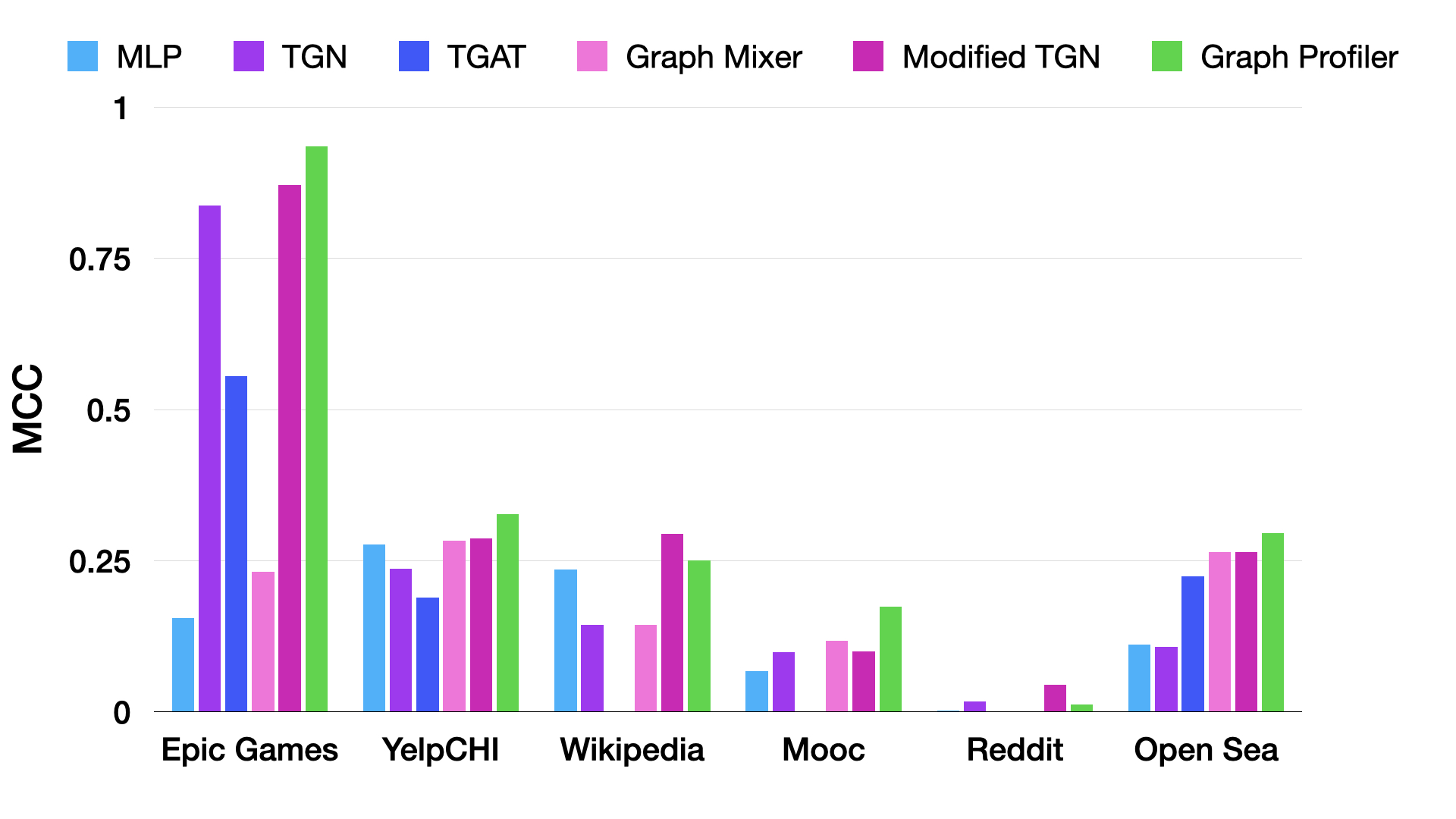

To understand RLC as a new task within TGL, we evaluated a two-layer MLP, TGN [6], TGAT [7], and Graph Mixer [8] on RLC by appropriately modifying these state-of-the-art FLP baselines. Based on their performance, we developed a set of design principles for RLC. Using these principles, we introduced Graph Profiler and benchmarked it on six datasets. For each dataset, we identified the optimal design parameters and analyzed how they relate to the dataset’s underlying dynamics. The experiments conducted demonstrate that Graph Profiler achieves superior performance in terms of the MCC, across various benchmark datasets. This highlights the model’s robustness and effectiveness in handling imbalanced data and delayed label availability.

Next section provides a hint of design principles that differentiate RLC from FLP.

On the Importance of Hyperparameter Sensitivity Differences between FLP and RLC

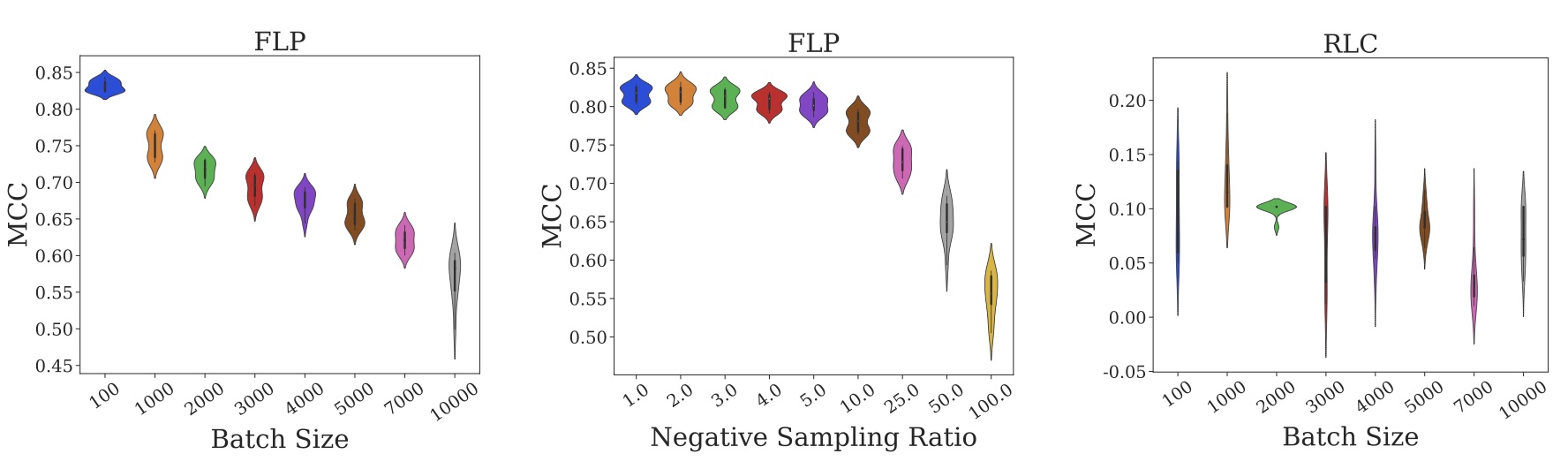

In our reproduction study, we examined the effects of negative sampling ratio and batch size on our TGN baseline for the FLP task. These factors, which we call non-architectural parameters, influence training but not the model architecture.

For batch size, increasing it typically maximizes GPU utilization, but we found a steady decline in MCC as batch size increased, indicating a significant trade-off with implications for production. This decline is due to less frequent gradient updates. For the negative sampling ratio, we observed a slight decline in MCC and reduced consistency between training runs as the number of negative samples increased.

Our findings suggest that RLC does not depend on batch size or require negative samples like FLP does. Therefore, assumptions made for FLP models may not apply to RLC, and directly translating TGL methods from FLP to RLC in industrial settings is not advisable. Moreover, RLC should be considered a distinct benchmark task for the TGL community.

Conclusion

The introduction of RLC as a benchmark task for TGL, along with the development of Graph Profiler, marks a significant step forward in temporal graph learning. This work not only fills a crucial gap in the research landscape but also provides practical tools and methodologies for improving classification tasks in dynamic and complex networks.

References

[1] Yingtong Dou, Zhiwei Liu, Li Sun, Yutong Deng, Hao Peng, and Philip S Yu. Enhancing graph neural network-based fraud detectors against camouflaged fraudsters. In Proc. ACM Int. Conf. Information and Knowledge Management, 2020.

[2] Srijan Kumar, Xikun Zhang, and Jure Leskovec. Predicting dynamic embedding trajectory in temporal interaction networks. In Proc. ACM SIGKDD Int. Conf. Knowledge Discovery and Data Mining, 2019.

[3] Lucio La Cava, Davide Costa, and Andrea Tagarelli. Sonar: Web-based tool for multimodal exploration of non-fungible token inspiration networks. In Proc. ACM SIGIR, 2023.

[4] Lucio La Cava, Davide Costa, and Andrea Tagarelli. Visually wired nfts: Exploring the role of inspiration in non-fungible tokens. CoRR abs/2303.17031, 2023.

[5] Davide Costa, Lucio La Cava, and Andrea Tagarelli. Show me your nft and i tell you how it will perform: Multimodal representation learning for nft selling price prediction. In Proc. ACM Web Conf., 2023.

[6] Emanuele Rossi, Ben Chamberlain, Fabrizio Frasca, Davide Eynard, Federico Monti, and Michael Bronstein. Temporal graph networks for deep learning on dynamic graphs. In Proc. Int. Conf. Learning Representations (ICLR) Graph Representation Learning Workshop, 2020.

[7] da Xu, Chuanwei Ruan, Evren Korpeoglu, Sushant Kumar, and Kannan Achan. Inductive representation learning on temporal graphs. In Proc. Int. Conf. Learning Representations (ICLR), 2020.

[8] Weilin Cong, Si Zhang, Jian Kang, Baichuan Yuan, Hao Wu, Xin Zhou, Hanghang Tong, and Mehrdad Mahdavi. Do we really need complicated model architectures for temporal networks? In Proc. Int. Conf. Learning Representations (ICLR), 2023.